It feels like magic. In the last few years, Artificial Intelligence has exploded into our lives, writing poetry, creating stunning art, and answering our most complex questions. To many, this “overnight” sensation, powered by models like ChatGPT and Gemini, seems to have appeared out of nowhere.

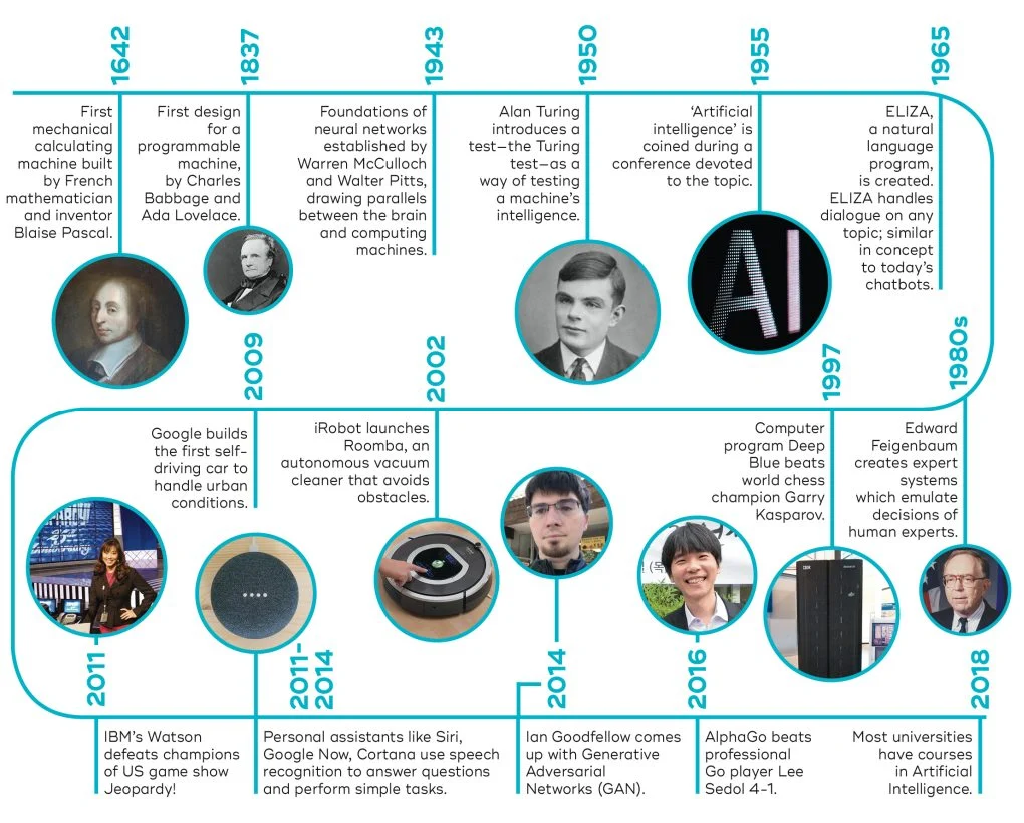

But AI wasn’t born yesterday. Today’s incredible achievements are built on the dreams, breakthroughs, and even bitter disappointments of nearly a century of research. To truly understand where we’re going, we need to see where we’ve been. Let’s take a journey through the defining eras of AI.

Era 1: The Ancient Dream & Theoretical Birth (Before 1956)

The dream of artificial beings is as old as human imagination. Ancient Greek myths told of Hephaestus, the god who forged living automatons from metal. Medieval legends spoke of the Golem, a creature of clay brought to life by magic. For centuries, intelligent machines were the stuff of fantasy.

In the mid-20th century, that fantasy began to merge with science. In 1943, Warren McCulloch and Walter Pitts proposed the first mathematical model of an artificial neuron: a simplified “brain cell” made of logic that takes on/off inputs and fires only when enough of them are active.
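For the curious, the idea fits in a few lines of code. What follows is a minimal sketch of a McCulloch-Pitts style threshold unit, not a reproduction of the 1943 paper's notation. The function names (`mcp_neuron`, `AND`, `OR`, `NOT`) and the specific thresholds are illustrative assumptions.

```python
def mcp_neuron(excitatory, inhibitory, threshold):
    """Return 1 if the unit fires, else 0.

    Inputs are 0/1 values. Any active inhibitory input blocks firing;
    otherwise the unit fires when enough excitatory inputs are active.
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


# Hypothetical helpers: simple logic gates built from one unit each.
def AND(a, b):
    return mcp_neuron([a, b], [], threshold=2)


def OR(a, b):
    return mcp_neuron([a, b], [], threshold=1)


def NOT(a):
    # No excitatory inputs and threshold 0: fires unless inhibited by `a`.
    return mcp_neuron([], [a], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", AND(a, b), "OR:", OR(a, b))
    print("NOT 0:", NOT(0), "NOT 1:", NOT(1))
```

Because units like these can act as logic gates, wiring them together can in principle compute any logical expression, which is what made the model such a striking bridge between brains and machines.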

The true philosophical launchpad, however, came from British mathematician Alan Turing. In his groundbreaking 1950 paper, “Computing Machinery and Intelligence,” Turing sidestepped the hard-to-define question, “Can machines think?” Instead, he asked a practical one: Can a machine imitate a human so well in a written conversation that a judge can’t reliably tell the difference? Turing called this the “imitation game.” It became known as the legendary “Turing Test,” giving the future field of AI its first grand challenge.

Era 2: The Golden Age of AI (1956 – 1974)

The field officially got its name at a summer workshop at Dartmouth College in 1956. Computer scientist John McCarthy had coined the term “Artificial Intelligence” in the 1955 proposal for the event. The atmosphere was electric with optimism. Many of the pioneers in that room believed that a machine with human-level intelligence was only a generation away.

This “Golden Age” saw a flurry of early successes. Researchers created programs that could solve algebra problems, prove mathematical theorems, and converse in simple English. A famous early chatbot named ELIZA, built by Joseph Weizenbaum at MIT in the mid-1960s, could even imitate a psychotherapist, captivating the people who interacted with it. The future seemed incredibly bright, and government funding poured into this promising new field.

Era 3: The First “AI Winter” (1974 – 1980s)

The early hype created impossibly high expectations. In reality, the computers of the day had nowhere near the memory or processing power needed to handle the immense complexity of real-world problems. The impressive early demos worked in small, controlled environments but couldn’t scale beyond them.

Promises were broken, and the mood soured. In 1973, a highly critical UK report known as the Lighthill Report dismissed the grand promises of AI, leading to deep cuts in British research funding. Funding in the United States tightened over the same period. The result was the first “AI Winter,” a period of disillusionment in which money dried up and research slowed to a crawl.

Era 4: The Quiet Revolution of Machine Learning (1980s – 2000s)

Out of the AI Winter, a new approach slowly emerged. Instead of trying to program a machine with explicit rules for every situation, researchers focused on creating systems that could learn from data. This was the quiet rise of Machine Learning.

A key breakthrough was the popularization of the “backpropagation” algorithm in the mid-1980s, which gave multi-layer neural networks a practical way to learn from their mistakes. Progress was slow but steady. Meanwhile, AI scored a spectacular public victory in 1997, when IBM’s Deep Blue supercomputer defeated world chess champion Garry Kasparov. Deep Blue relied mainly on massive search and hand-tuned evaluation rules rather than learning from data, but the symbolism was enormous: for the first time, a machine had beaten a reigning world champion in a full match at a game of deep strategy and intellect. AI was officially back.
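To make the backpropagation idea mentioned above a little more concrete, here is a minimal sketch on the smallest possible “network”: one input, one hidden unit, one output. Everything in it (the function names, the tanh activation, the squared-error loss, the learning rate) is an illustrative assumption rather than the setup of any particular historical paper. The point is only to show the core trick: compute the error at the output, then pass it backward through each layer using the chain rule.

```python
import math


def forward(w1, w2, x):
    """Run the tiny network: hidden = tanh(w1 * x), output = w2 * hidden."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y


def loss(w1, w2, x, target):
    """Squared error between the network output and the target."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2


def backward(w1, w2, x, target):
    """Backpropagation: apply the chain rule from the output back to w1."""
    h, y = forward(w1, w2, x)
    dL_dy = y - target                   # error at the output
    dL_dw2 = dL_dy * h                   # gradient for the output weight
    dL_dh = dL_dy * w2                   # error passed back to the hidden unit
    dL_dw1 = dL_dh * (1.0 - h * h) * x   # through tanh, then to the input weight
    return dL_dw1, dL_dw2


def train(w1, w2, x, target, lr=0.1, steps=200):
    """Plain gradient descent: nudge each weight against its gradient."""
    for _ in range(steps):
        g1, g2 = backward(w1, w2, x, target)
        w1 -= lr * g1
        w2 -= lr * g2
    return w1, w2


if __name__ == "__main__":
    w1, w2 = 0.5, -0.3
    print("loss before:", loss(w1, w2, 1.0, 0.8))
    w1, w2 = train(w1, w2, 1.0, 0.8)
    print("loss after: ", loss(w1, w2, 1.0, 0.8))
```

Real networks repeat the same backward pass across millions or billions of weights, but the logic of each step is the same as in this toy example.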

Era 5: The Deep Learning Explosion (2010s – Present)

The 2010s were the decade when everything changed. Three key ingredients came together to create a perfect storm:

- Big Data: The internet had created an unimaginably vast ocean of text, images, and videos for AIs to learn from.

- Powerful GPUs: Graphics processing units (GPUs), chips originally designed for video games, turned out to be perfect for the parallel computations needed to train massive neural networks.

- Algorithmic Breakthroughs: Researchers developed better ways to build and train much deeper neural networks, such as ReLU activations and dropout.

The “Big Bang” of this new era happened in 2012. A deep neural network named AlexNet entered the ImageNet competition, a challenge to identify objects in photos. AlexNet didn’t just win; it cut the top-5 error rate to about 15%, compared with roughly 26% for the runner-up, proving the stunning power of deep learning.

The final piece of the modern puzzle arrived in 2017 when researchers at Google published a paper titled “Attention Is All You Need.” It introduced the Transformer architecture, a model design built around “attention,” a mechanism that lets every word in a sequence weigh how relevant every other word is to it. This is the foundational technology that powers virtually all of the large language models (LLMs) captivating the world today, from GPT-4 to Gemini.
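For readers who want a peek under the hood, the heart of the Transformer is an operation called scaled dot-product attention. The sketch below is a bare-bones, pure-Python illustration of that single operation, not a full Transformer. The function names and the toy inputs are assumptions made for this example. Each query is compared against every key, the scores are turned into weights that sum to 1, and the output is a weighted blend of the values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly does this query match each key?
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend the value vectors according to the weights.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Toy example: three "words", each a 2-number vector (made-up values).
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, weights = attention(x, x, x)
    for row in weights:
        print([round(w, 3) for w in row])
```

In a real model the queries, keys, and values are produced by learned weight matrices and the operation runs many times in parallel, but the weighting step works the same way.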

Conclusion: The Exponential Curve

The journey of AI has been a long and winding road, from ancient dreams to mathematical theories, through a freezing “winter,” and into the explosive spring of deep learning. While its history spans nearly a century, the pace of innovation is now faster than at any point in that story, with each breakthrough building on the last. The most dramatic chapters in the story of Artificial Intelligence are not in the past. They are being written right now.